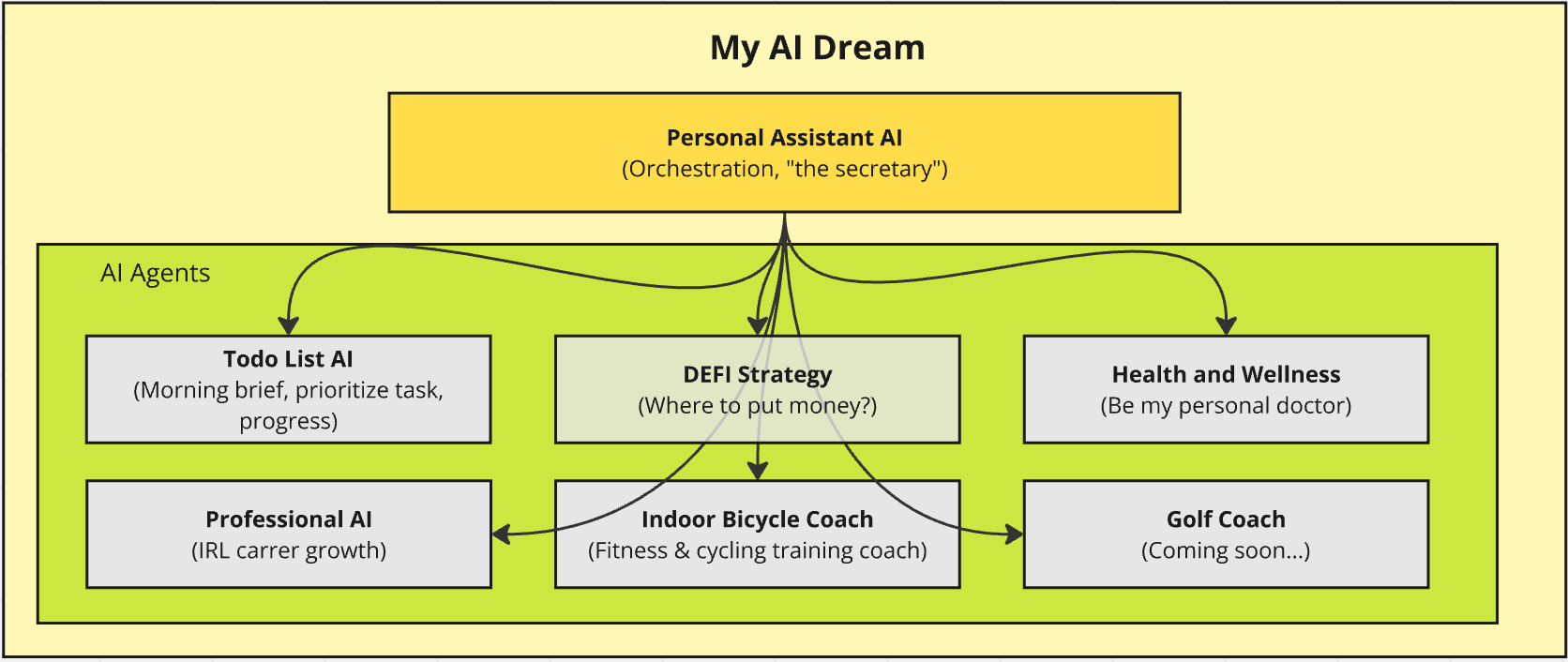

How I build my personal AI Agent

This will be a long story. The background: I want to build an AI agent that sends me a morning briefing at 8:30 AM every day. It covers both my day job and the hobby projects I work on in the evenings and on weekends.

That is my initial setup, but my ultimate goal is an AI agent that helps me with major tasks: crypto airdrops, recommended DeFi strategies (where to allocate funds), an AI indoor cycling agent that helps me lose weight, and a wellness AI that takes care of my health. (I am currently 20 kg overweight.)

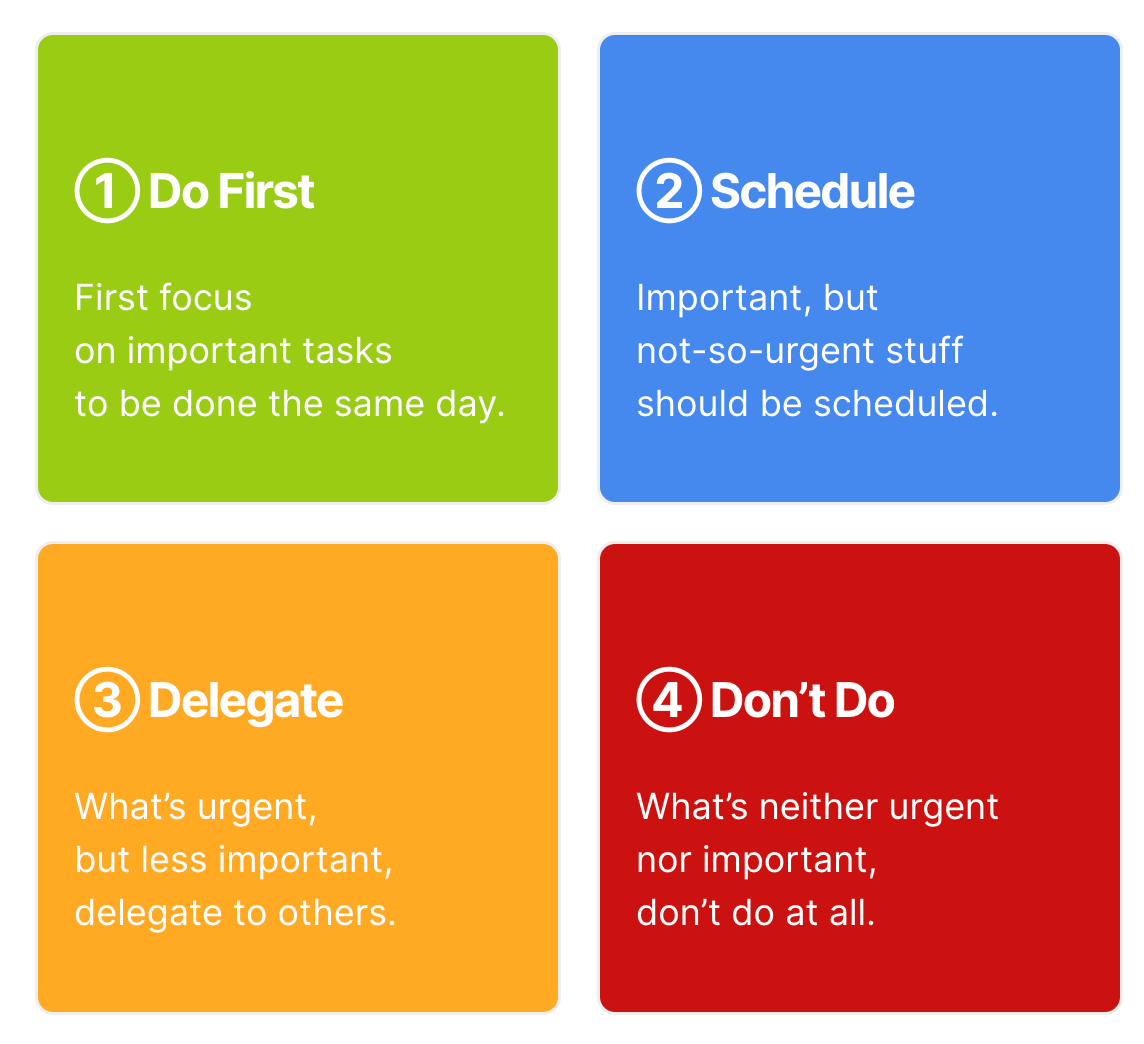

Eisenhower Matrix (Priority Matrix)

Let’s start with the AI Personal Assistant. If you love a to-do list, we share the same habit. I’m always happy when I can strike through an accomplished task. I use the Eisenhower Matrix. It is the simplest and most effective method for me. I recommend visiting the link so you can see how it works. It’s one of the best productivity tools I’ve used.

Left: 4 Quadrant Task List, Right: Strategy to handle each task in specific quadrant

Quick Tip:

Start by sorting tasks into the four boxes (left picture). They can shift boxes as priorities and timing change. Use two questions only:

- Importance: low or high?

- Urgency: low or high?

When you’re done, pause and make a plan. Each quadrant has its own playbook (right picture).

I built this as a Notion database and just drop each task into a box by hand. Honestly, you don’t need AI for that. The matrix is simple: it tells you what to do first, what to focus on, what to ignore, and what to delegate. That’s enough for me most days.
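A minimal sketch of the two-question sort, in case you prefer to see it as code. The `Task` class, the playbook labels, and the sample tasks are illustrative assumptions, not part of my Notion setup.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    important: bool
    urgent: bool


# (important, urgent) -> playbook. Labels are assumptions that follow the
# usual Eisenhower wording: do first, focus/schedule, delegate, ignore.
PLAYBOOK = {
    (True, True): "do first",
    (True, False): "focus",
    (False, True): "delegate",
    (False, False): "ignore",
}


def quadrant(task: Task) -> str:
    """Answer the two questions and return the matching playbook."""
    return PLAYBOOK[(task.important, task.urgent)]


def sort_into_boxes(tasks: list) -> dict:
    """Group task names by quadrant, keeping the input order inside each box."""
    boxes = {label: [] for label in PLAYBOOK.values()}
    for task in tasks:
        boxes[quadrant(task)].append(task.name)
    return boxes


if __name__ == "__main__":
    sample = [
        Task("Fix production bug", important=True, urgent=True),
        Task("Plan next quarter", important=True, urgent=False),
        Task("Reply to vendor email", important=False, urgent=True),
        Task("Scroll social feed", important=False, urgent=False),
    ]
    for label, names in sort_into_boxes(sample).items():
        print(f"{label}: {names}")
```

Tasks can move between boxes simply by flipping one of the two flags and sorting again.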

But I do want a little helper—a bot that sends me a morning brief on Telegram and notices my recent progress over the past 7–14 days. It should flag what’s been idle too long and what might have dependencies. And I want to add tasks quickly with a simple Telegram command. That’s it. That’s my first AI use case.
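The post does not show how the 8:30 AM trigger is scheduled, so here is one possible sketch: compute the next 08:30 in local time and sleep until then. The fixed UTC+7 offset (Bangkok has no daylight saving) and the function names are assumptions for illustration.

```python
from datetime import datetime, time, timedelta, timezone

# Assumption: Bangkok time as a fixed UTC+7 offset (no DST).
BANGKOK = timezone(timedelta(hours=7))


def next_brief_at(now: datetime, at: time = time(8, 30)) -> datetime:
    """Return the next local 08:30 strictly after `now` (`now` must be tz-aware)."""
    local = now.astimezone(BANGKOK)
    candidate = local.replace(hour=at.hour, minute=at.minute, second=0, microsecond=0)
    if candidate <= local:
        candidate += timedelta(days=1)  # today's slot has passed, use tomorrow
    return candidate


def seconds_until_next_brief(now: datetime) -> float:
    """How long a scheduler loop would need to sleep before sending the brief."""
    return (next_brief_at(now) - now).total_seconds()


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print("next brief:", next_brief_at(now).isoformat())
    print("sleep seconds:", round(seconds_until_next_brief(now)))
```

A cron entry on the server would do the same job; this version just keeps everything inside the Python app.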

What do you need?

- A VPS or self-hosted server (Ubuntu, small spec, tiny cost)

- Telegram account, Telegram Bot (free)

- OpenAI or any LLM API (most are not free)

- ChatGPT for troubleshooting (free)

Hardware and Network Preparation

First, you’ll need a machine to host this. I recommend using a VPS; it’s the easy path. I had a steep learning curve when I set up crypto nodes for airdrops on my own hardware, so self-hosting is fine for me now. The key thing is that you need HTTPS to receive Telegram webhooks. If you run your stack on a VPS, that part is simpler. For self-hosting with a dynamic IP, I use Cloudflare Zero Trust to route HTTPS and forward it to my localhost:8000.

I’m building the tech stack with Docker Compose so it can run multiple services and stay easy to decouple later. You can check my other article for how I manage self-hosting—covering virtual machines, network setup, VPN, disk management, and more.

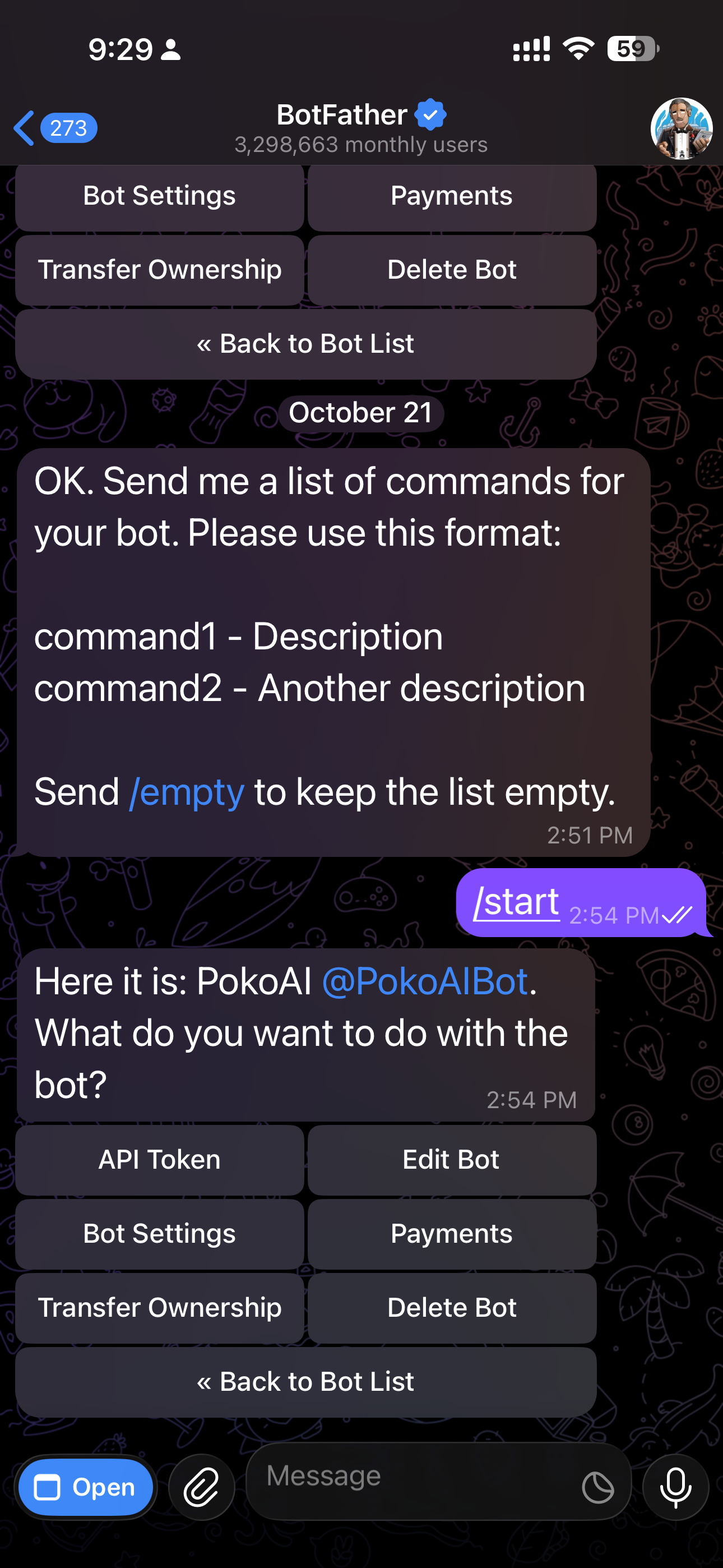

Initial Setup: API & TG Webhooks

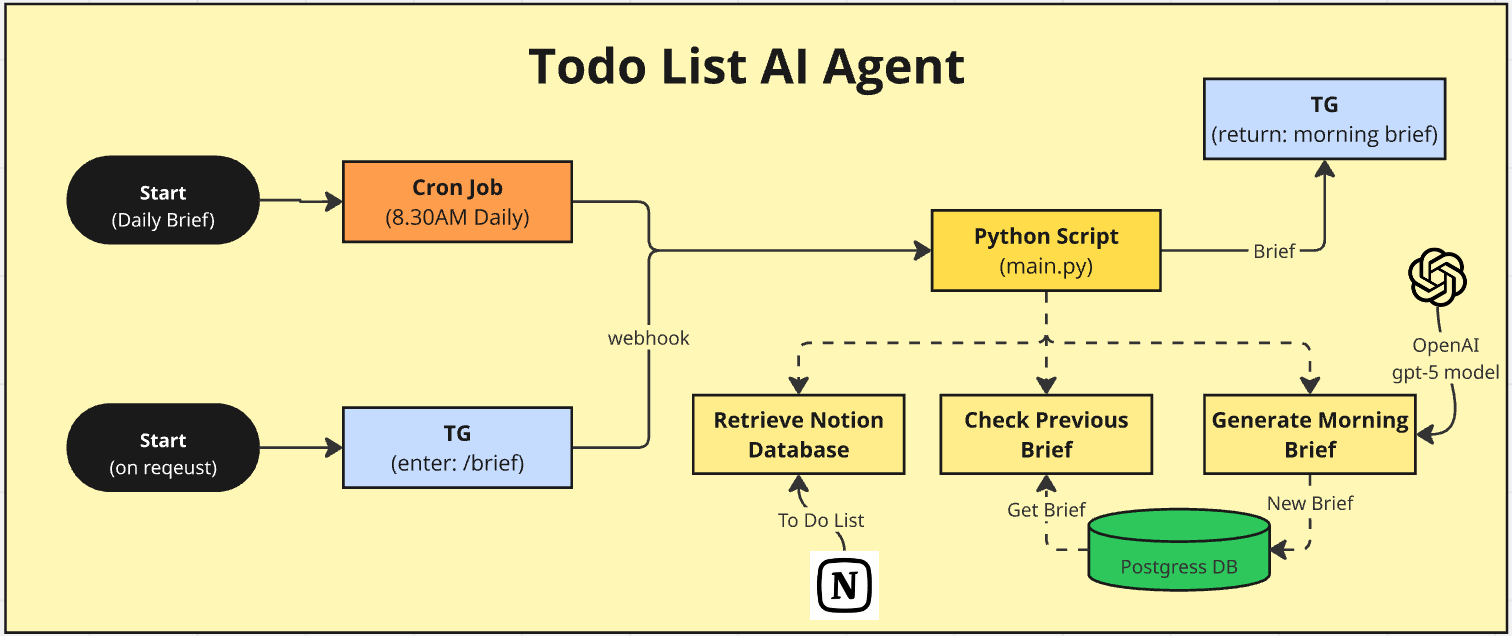

From the picture above, here is what we need:

- TG bot setup is actually easy. Open Telegram, search for BotFather, and create a new bot. After that, you’ll get your bot token—keep it handy for webhook registration.

- For TG webhooks: instead of running a batch job every second to check messages, webhooks ping your Python app the moment you send a command. It’s event-driven, not polling—so it’s faster and lighter.

Here’s the webhook registration command (swap in your details):

BOT_TOKEN="<BOT_Token_FROM_BOT_FATHER>"
SERVER_URL="<ENTER_YOUR_SERVER_URL_POINT_TO_PYTHON>"
SERVER_SECRET_PATH="<UUID_RANDOM_STRING_SEE_COMMAND_BELOW>"
SECRET_TOKEN="<ENTER_ANY_SECRET_WORD>"

After all inputs are ready, run the command below. Two details to watch: the FastAPI route shown later is /tg/{secret}, so SERVER_URL should end with /tg; and Telegram only accepts letters, digits, underscores, and hyphens in secret_token.

curl -s -X POST "https://api.telegram.org/bot$BOT_TOKEN/setWebhook" \
  -d "url=$SERVER_URL/$SERVER_SECRET_PATH" \
  -d "secret_token=$SECRET_TOKEN"

Expected output:

{
  "ok": true,
  "result": true,
  "description": "Webhook was set"
}

Tip: to generate the random string for SERVER_SECRET_PATH, I use this simple Python command. Copy and paste all of it, then press Enter:

python3 - <<'PY'
import secrets; print(secrets.token_urlsafe(32))
PY

# you will get a long random string
# example: kjdfsd0fusd09fsudf9sdjfpsdisdfjsdpofsd

That’s it. Once the webhook is set, Telegram will POST updates to your endpoint instantly.
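If you would rather prepare the registration from Python, here is a hedged sketch that generates both secrets and builds the setWebhook request without sending it. The helper names, the example domain, and the sample bot token are hypothetical; the character rule for secret_token is the one Telegram documents.

```python
import re
import secrets

# Telegram's documented rule for secret_token: 1-256 characters,
# only A-Z, a-z, 0-9, underscore and hyphen.
_SECRET_RE = re.compile(r"[A-Za-z0-9_-]{1,256}")


def is_valid_secret_token(value: str) -> bool:
    return _SECRET_RE.fullmatch(value) is not None


def build_set_webhook_request(bot_token: str, server_url: str,
                              secret_path: str, secret_token: str):
    """Return (endpoint, form_fields) for setWebhook. Nothing is sent here."""
    if not server_url.startswith("https://"):
        raise ValueError("Telegram webhooks require an HTTPS URL")
    if not is_valid_secret_token(secret_token):
        raise ValueError("secret_token has characters Telegram will reject")
    endpoint = f"https://api.telegram.org/bot{bot_token}/setWebhook"
    fields = {
        "url": f"{server_url.rstrip('/')}/{secret_path}",
        "secret_token": secret_token,
    }
    return endpoint, fields


if __name__ == "__main__":
    path = secrets.token_urlsafe(32)    # random path segment
    header = secrets.token_urlsafe(32)  # URL-safe output fits Telegram's rule
    endpoint, fields = build_set_webhook_request(
        "123456:EXAMPLE", "https://example.com/tg", path, header
    )
    print(endpoint)
    print(fields)
```

Posting `fields` to `endpoint` (for example with httpx) is then equivalent to the curl command above.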

Coding - Hello World

This is the most challenging—and fun—part. Trust me, you can do it with ChatGPT. I’m a ‘25-years-ago’ developer who barely remembers more than if-then-else and for loops, and even so, ChatGPT makes the rest feel possible.

Structure

app_root

|---app

|   |---main.py

|   |---config.py

|   |---deps.py

|   |---routers

|   |   |---__init__.py

|   |   |---pa.py

|   |   |---tg.py

|---.env

|---requirements.txt

|---docker-compose.yml

- .env – Environment variables for secrets and configuration.

- requirements.txt – Python dependencies pinned for the app image/build.

- docker-compose.yml – Services definition to build/run the API (and related containers).

- app/main.py – App entrypoint that creates the FastAPI instance and mounts routers.

- app/config.py – Centralized settings using pydantic-settings to load env vars.

- app/deps.py – Reusable FastAPI dependencies (e.g., header checks, auth, DB).

- app/routers/ – Folder grouping HTTP route modules for cleaner organization.

- app/routers/__init__.py – Required. Marks the folder as a Python package.

- app/routers/pa.py – Routes for your “Personal AI”.

- app/routers/tg.py – Telegram webhook endpoint and message handling logic.

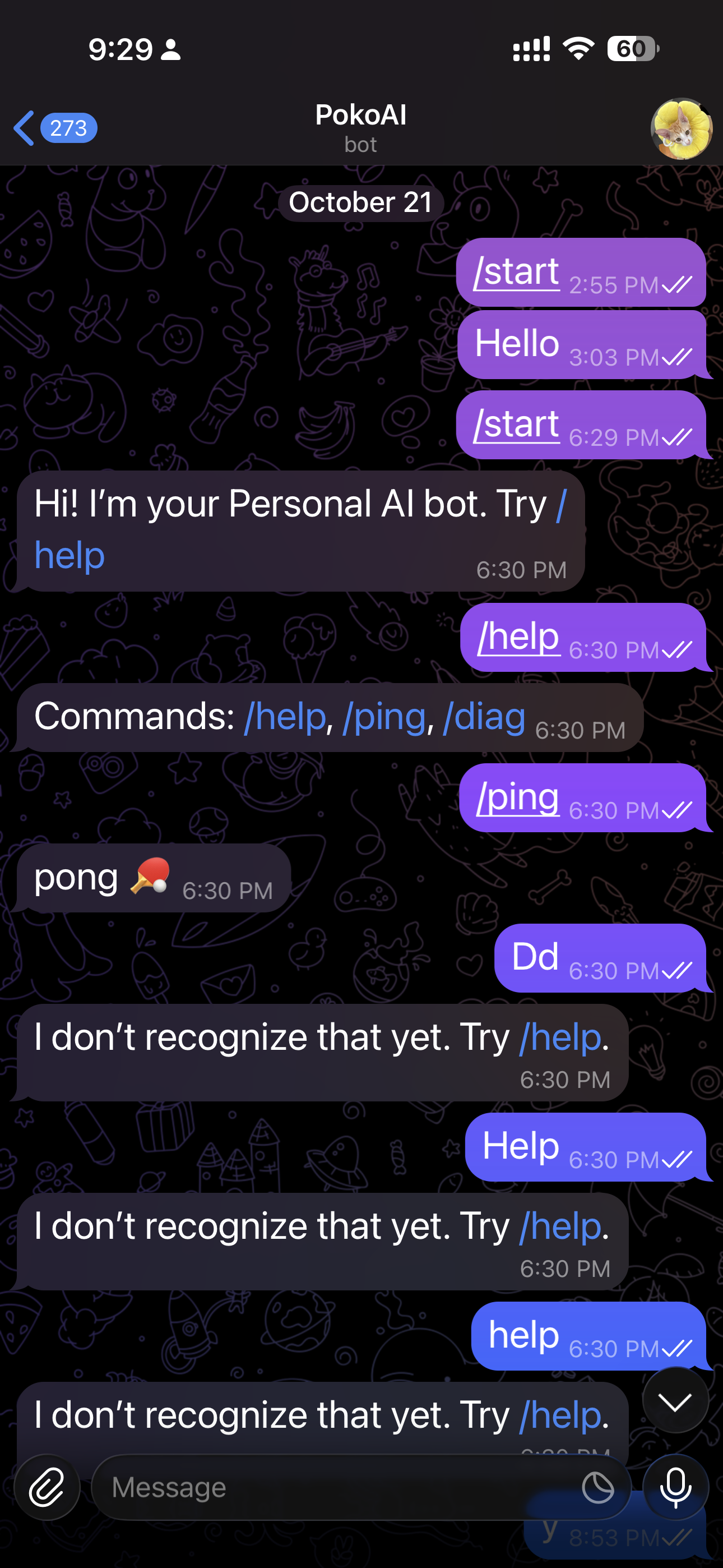

Hello World - 1st MVP

Let’s spin up our first “Hello, World” and test three things:

- We receive the webhooks

- The webhook route parses the incoming message

- We send a response back to Telegram

I’m using FastAPI to keep the API clean. Here’s the flow:

- Telegram sends a webhook to our registered URL and path. (app/routers/tg.py)

- We validate the webhook secret. Telegram includes the secret phrase we registered on every call, so we can confirm the request is legit.

- If validation passes, we extract the message body from the webhook.

- We route the message to the right handler. For now it’s simple keyword routing; later I’ll let Gen AI handle smarter intent routing.

- We send a response back to Telegram for each route.
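The flow above can be sketched without FastAPI or the network, which makes it easy to test on your laptop first. This is a simplified stand-in, not the app code: the secret value, the shortened reply texts, and the `handle` helper are assumptions, and the constant-time comparison via `hmac.compare_digest` is an extra precaution I chose for the sketch.

```python
import hmac
from typing import Optional, Tuple

EXPECTED_SECRET = "my-secret-word"  # hypothetical; the real one lives in .env


def header_is_valid(received: Optional[str], expected: str = EXPECTED_SECRET) -> bool:
    """Step 2: compare the X-Telegram-Bot-Api-Secret-Token header value."""
    if received is None:
        return False
    return hmac.compare_digest(received.encode(), expected.encode())


def extract_text(update: dict) -> Optional[Tuple[int, str]]:
    """Step 3: pull (chat_id, text) out of a Telegram update, if it has text."""
    msg = update.get("message") or update.get("edited_message")
    if not msg or "text" not in msg:
        return None
    return msg["chat"]["id"], msg["text"].strip()


def route_command(text: str) -> str:
    """Step 4: keyword routing. Also accepts the /cmd@BotName form used in groups."""
    command = text.split()[0].split("@")[0] if text else ""
    replies = {
        "/start": "Hi! Try /help",
        "/help": "Commands: /help, /ping, /diag",
        "/ping": "pong",
        "/diag": "Diag OK",
    }
    return replies.get(command, "I don't recognize that yet. Try /help.")


def handle(update: dict, header_secret: Optional[str]) -> Optional[Tuple[int, str]]:
    """Steps 2-5 together: returns (chat_id, reply) or None when nothing is sent."""
    if not header_is_valid(header_secret):
        return None
    parsed = extract_text(update)
    if parsed is None:
        return None
    chat_id, text = parsed
    return chat_id, route_command(text)


if __name__ == "__main__":
    fake_update = {"message": {"chat": {"id": 42}, "text": " /ping "}}
    print(handle(fake_update, "my-secret-word"))
    print(handle(fake_update, "wrong-secret"))
```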

#.env
# Public base URL (required by app/config.py below)
PUBLIC_BASE_URL=<YOUR_PUBLIC_HTTPS_URL>
# Telegram bot
TELEGRAM_BOT_TOKEN=<BOT_Token_FROM_BOT_FATHER>
TELEGRAM_WEBHOOK_SECRET_PATH=<UUID_RANDOM_STRING>
TELEGRAM_WEBHOOK_HEADER_SECRET=<ENTER_ANY_SECRET_WORD>

#main.py
from fastapi import FastAPI
from app.routers import tg, pa

app = FastAPI(title="Personal AI API", version="0.1.0")

@app.get("/")
async def root():
    return {"ok": True}

app.include_router(tg.router)

#app/config.py

from typing import Optional
from pydantic import AnyUrl
from pydantic_settings import BaseSettings, SettingsConfigDict

class Settings(BaseSettings):
    # Tell pydantic-settings to read .env and IGNORE unknown keys
    model_config = SettingsConfigDict(env_file=".env", extra="ignore")

    # --- general ---
    ENV: str = "dev"
    TZ: str = "Asia/Bangkok"

    # --- URLs / DB ---
    PUBLIC_BASE_URL: str  # e.g. https://pa.poko.blue

    # --- Telegram ---
    TELEGRAM_BOT_TOKEN: str
    TELEGRAM_WEBHOOK_SECRET_PATH: str  # path segment in /tg/{secret}
    # The name must match the .env key and the attribute read in app/deps.py
    TELEGRAM_WEBHOOK_HEADER_SECRET: Optional[str] = None  # X-Telegram-Bot-Api-Secret-Token
    TELEGRAM_CHAT_ID: Optional[str] = None

settings = Settings()

# app/deps.py
from fastapi import Header, HTTPException, status
from app.config import settings

async def verify_tg_header(
    x_telegram_bot_api_secret_token: str | None = Header(default=None),
):
    """
    If TELEGRAM_WEBHOOK_HEADER_SECRET is set,
    require Telegram to send it in header:
    X-Telegram-Bot-Api-Secret-Token
    """
    expected = settings.TELEGRAM_WEBHOOK_HEADER_SECRET
    if not expected:
        return  # no check configured
    if x_telegram_bot_api_secret_token != expected:
        raise HTTPException(
            status_code=status.HTTP_401_UNAUTHORIZED,
            detail="Bad secret header",
        )

# app/routers/tg.py
from fastapi import APIRouter, Request, Depends, HTTPException, BackgroundTasks
from app.deps import verify_tg_header
from app.config import settings
import httpx, asyncio

router = APIRouter()

@router.post("/tg/{secret}")
async def telegram_webhook(secret: str, request: Request, bg: BackgroundTasks, _=Depends(verify_tg_header)):
    if secret != settings.TELEGRAM_WEBHOOK_SECRET_PATH:
        raise HTTPException(status_code=404)
    update = await request.json()
    # process in background to return 200 fast (Telegram expects quick ack)
    bg.add_task(process_update, update)
    return {"ok": True}

async def process_update(update: dict):
    msg = update.get("message") or update.get("edited_message")
    if not msg or "text" not in msg:
        return
    chat_id = msg["chat"]["id"]
    text = msg["text"].strip()
    reply = route_command(text)
    await send_chat_action(chat_id, "typing")
    await send_message(chat_id, reply)

def route_command(text: str) -> str:
    if text == "/start": return "Hi! I’m your Personal AI bot. Try /help"
    if text == "/help": return "Commands: /help, /ping, /diag"
    if text == "/ping": return "pong 🏓"
    if text == "/diag": return "Diag OK ✅"
    return "I don’t recognize that yet. Try /help."

async def send_message(chat_id: int, text: str):
    url = f"https://api.telegram.org/bot{settings.TELEGRAM_BOT_TOKEN}/sendMessage"
    async with httpx.AsyncClient(timeout=10) as c:
        await c.post(url, json={"chat_id": chat_id, "text": text})

async def send_chat_action(chat_id: int, action: str):
    url = f"https://api.telegram.org/bot{settings.TELEGRAM_BOT_TOKEN}/sendChatAction"
    async with httpx.AsyncClient(timeout=5) as c:
        await c.post(url, json={"chat_id": chat_id, "action": action})

Left: BotFather for setting up the token. Right: testing the webhook and the response sent back from Python.

Make the routing logic smarter

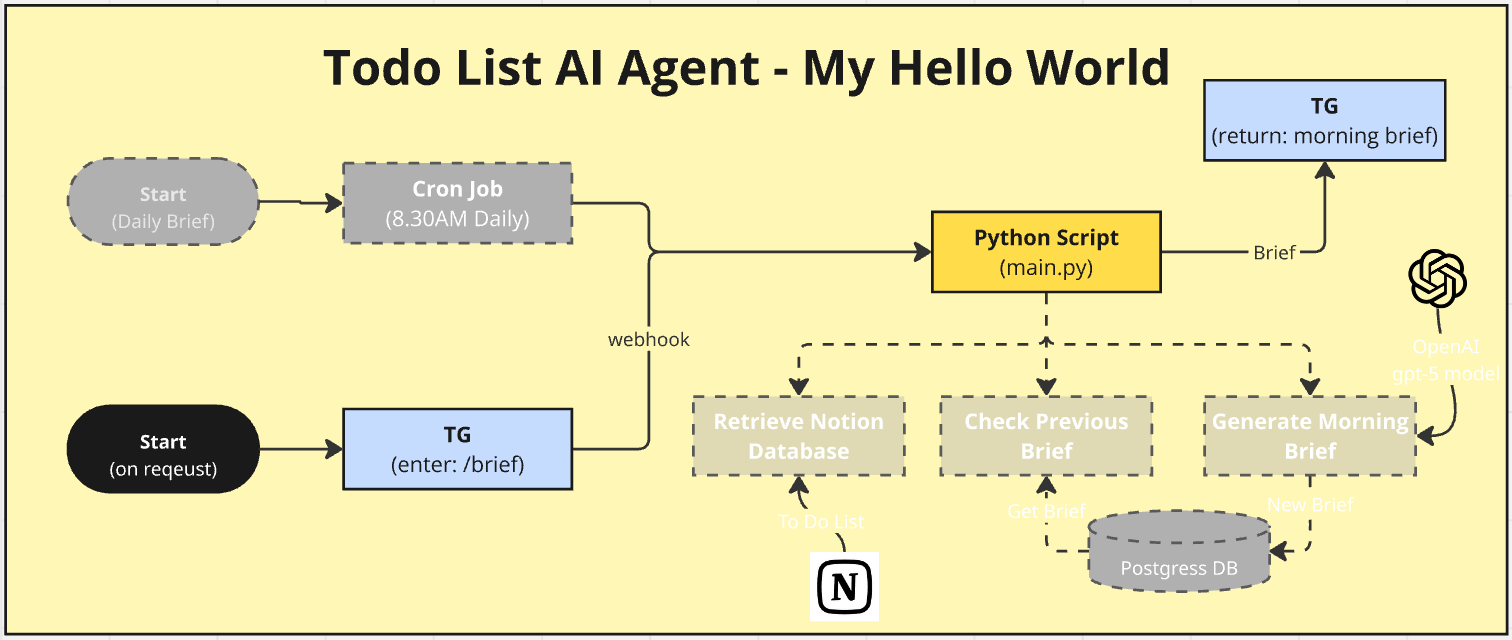

Going back to the first picture—my plan is to make a personal AI assistant as the front door to other agents. I’m upgrading the current keyword-based routing by adding an LLM to decide the route, so I don’t have to maintain fixed keywords. Let the AI handle the routing for me.

- Added libraries to requirements.txt

- Set up the OpenAI config

- Created decider_lc.py for routing decisions

- Updated tg.py to use the LLM

- Integrated LangSmith for model monitoring

#requirements.txt

fastapi==0.115.0

uvicorn[standard]==0.30.6

sqlalchemy[asyncio]==2.0.36

asyncpg==0.29.0

httpx==0.27.2

pydantic>=2.9.2

pydantic-settings==2.5.*

python-dotenv==1.0.1

langchain-openai==0.2.3

langchain==0.3.3

langsmith>=0.1

I added LangChain and LangSmith so Docker will install the libraries for us during the build.

Next, we set up the OpenAI API key and LangSmith:

#.env

# OpenAI (used by the LangChain planner)

OPENAI_API_KEY=sk-proj-xxxxxx

MODEL_NAME=gpt-4o-mini

#Langsmith (observability)

LANGCHAIN_TRACING_V2=true

LANGCHAIN_API_KEY=lsv2_pt_xxxxxx

LANGCHAIN_PROJECT=pa-tg-router

I can’t use GPT-5 yet, but GPT-4o mini is plenty for this. For LangSmith, it’s simple: sign in on the LangSmith site, create a new project, and grab your API key. You might not see the project right away; the project and logs appear after the first call.

Next up, let’s create decider_lc.py. This is where I start using the LangChain framework, something I want to explore and really master.

# app/agents/decider_lc.py
from typing import Optional, Literal
from pydantic import BaseModel, Field
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import PydanticOutputParser
from app.config import settings
import json
import traceback

def jlog(**k):
    print(json.dumps(k, ensure_ascii=False), flush=True)

# The structured decision we want the LLM to return
class Decision(BaseModel):
    action: Literal[
        "SEND_PLAIN_REPLY",
        "GET_TODAYS_BRIEF",
        "GET_TASKS",
        "UNKNOWN"
    ] = "UNKNOWN"
    reason: str = Field(..., description="Short rationale.")
    args: Optional[dict] = None

parser = PydanticOutputParser(pydantic_object=Decision)

SYSTEM = """You route Telegram user messages for a productivity bot.
Choose exactly ONE action.
- Greetings or generic chat => SEND_PLAIN_REPLY
- Ask for today's summary/brief/priorities => GET_TODAYS_BRIEF
- Ask to list tasks/search => GET_TASKS
- Unsure => UNKNOWN
Respond ONLY with JSON that matches the schema.
"""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", SYSTEM + "\n\n{format_instructions}"),
        ("user", "{user_text}")
    ]
).partial(format_instructions=parser.get_format_instructions())

_llm = ChatOpenAI(
    model=settings.llm_model,
    temperature=0.1,  # low temperature keeps routing near-deterministic
    api_key=settings.OPENAI_API_KEY,
)

# Build a runnable chain: prompt -> llm -> parser
chain = prompt | _llm | parser

async def decide(user_text: str) -> Decision:
    # LangSmith tracing will capture this call if LANGCHAIN_TRACING_V2=true
    try:
        return await chain.ainvoke({"user_text": user_text})
    except Exception as e:
        # bind the exception as `e` and use the same settings name as _llm above
        jlog(event="llm_router_exception", error=str(e), model=settings.llm_model)
        jlog(stack=traceback.format_exc())  # if you want full stack
        return Decision(action="UNKNOWN", reason="router_error", args=None)

Honestly, the LangChain part is pretty straightforward. Think old-school: input → process → output.

- For the input, you give the prompt (tell the LLM exactly what you want) and pick the model to use.

- In the process, you can keep it simple or add a chain of tools if you need more power.

- The output is just the LLM’s result—but it helps to tell the model up front what format you expect, so the output lands clean.
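To make the output step concrete, here is a standard-library sketch of what "landing clean" means: parse the model's JSON, accept only known actions, and fall back to UNKNOWN otherwise. It is an illustration of the idea, not what PydanticOutputParser does internally; `parse_decision` and the `reason` codes are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Optional

ALLOWED_ACTIONS = {"SEND_PLAIN_REPLY", "GET_TODAYS_BRIEF", "GET_TASKS", "UNKNOWN"}


@dataclass
class Decision:
    action: str = "UNKNOWN"
    reason: str = ""
    args: Optional[dict] = None


def parse_decision(raw: str) -> Decision:
    """Turn raw LLM text into a Decision, falling back to UNKNOWN on bad output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return Decision(reason="invalid_json")
    if not isinstance(data, dict):
        return Decision(reason="not_an_object")
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return Decision(reason="unknown_action")
    args = data.get("args")
    return Decision(
        action=action,
        reason=str(data.get("reason", "")),
        args=args if isinstance(args, dict) else None,
    )


if __name__ == "__main__":
    print(parse_decision('{"action": "GET_TODAYS_BRIEF", "reason": "asked for brief"}'))
    print(parse_decision("Sure! Here is your brief..."))
```

The fallback matters because a router that raises on malformed output would leave the Telegram user without any reply.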

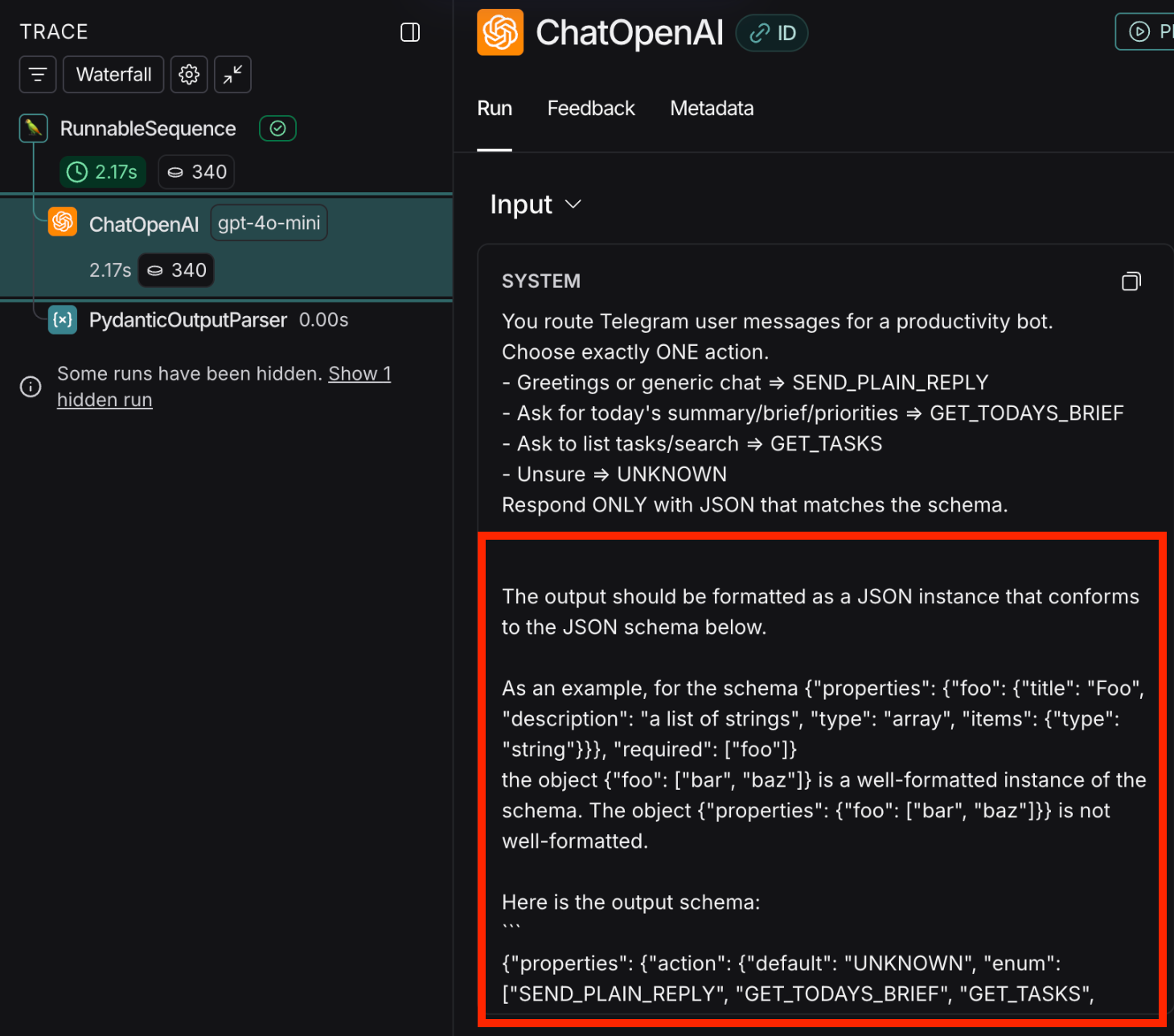

That’s basically it. The only surprise: when I checked LangSmith, I saw inputs in the final prompt that I never typed. 🤔

Sometimes LangChain quietly fattens your prompt. It can slip in a system message (“be helpful…”), wrap your text with a template, carry over memory/history, or add tool hints. So we open LangSmith and think, “Wait, where did that extra stuff come from?”

In this case, the culprit isn’t hidden magic—it’s our line of code:

prompt = prompt.partial(format_instructions=parser.get_format_instructions())

Our template has {format_instructions} in it. That function call pre-fills that slot with a big block of rules the parser needs (usually a JSON schema and “output exactly like this” text). So when we look at the final prompt in LangSmith, we see extra instructions—not because LangChain invented them, but because we injected them with .partial(...).
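The same pre-filling effect can be reproduced with plain Python, which may help when explaining it to someone who has not used LangChain. This is an analogy only: the short template and the one-line `FORMAT_INSTRUCTIONS` are stand-ins for the much longer text the real parser generates.

```python
from functools import partial

SYSTEM_TEMPLATE = "You route Telegram user messages.\n\n{format_instructions}"

# Stand-in for parser.get_format_instructions(); the real text is much longer.
FORMAT_INSTRUCTIONS = 'Return a JSON object with the keys "action" and "reason".'


def render(format_instructions: str, user_text: str) -> list:
    """Fill both slots and return the final (role, content) messages."""
    system = SYSTEM_TEMPLATE.format(format_instructions=format_instructions)
    return [("system", system), ("user", user_text)]


# Pre-fill one slot, like .partial(...): callers now only pass user_text,
# yet the final prompt still contains the injected instructions.
render_prompt = partial(render, FORMAT_INSTRUCTIONS)

messages = render_prompt(user_text="What is on my plate today?")

if __name__ == "__main__":
    for role, content in messages:
        print(f"[{role}] {content}")
```

The user only typed one sentence, but the system message carries the extra block, which is exactly what shows up in the LangSmith trace.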

That’s one of the real benefits of LangSmith. Under the hood, LangChain handles a lot of the complexity an LLM needs, but without visibility it can feel like a black box. LangSmith lets you see what’s actually happening—and it tracks tokens and cost per prompt. Super helpful. It saves you from building something for weeks or months only to realize later it isn’t commercially viable.

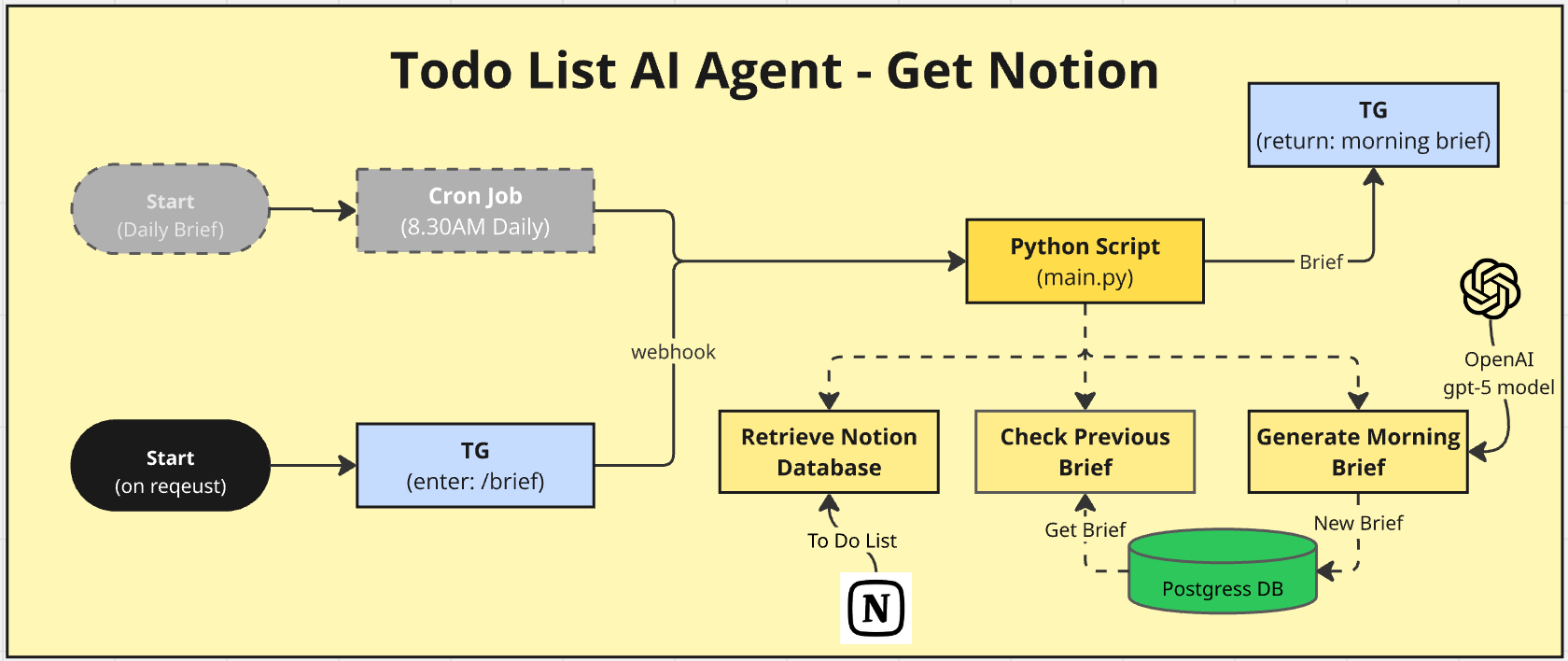

Get Notion Item & Generate Morning Brief

Now that routing works, let’s focus on getting my to-do list from Notion. Here is the summary:

- The decider figures out that the chat is about a “today brief.”

- It routes the request to get_todays_brief(...).

- That function pulls fresh tasks from Notion, buckets them (past due, due today, completed, idle), and applies memory rules (snoozes, nudges, workday ordering).

- It then generates a concise summary (Markdown) and sends it back on Telegram.

- Finally, it logs an audit trail (brief + items) and updates lightweight memory (seen/recommended/celebrated, nags, timestamps).

Here is the code:

# app/agents/tools.py
from __future__ import annotations

import json
from datetime import datetime, timedelta, date, timezone
from typing import Dict, List, Optional, Tuple

import httpx
from notion_client import Client as NotionClient  # notion-client package; not in the requirements.txt shown above
from pydantic import BaseModel
from sqlalchemy import (
    Column, Integer, BigInteger, String, DateTime, Text, ForeignKey, select, func
)
from sqlalchemy.dialects.postgresql import JSONB
from sqlalchemy.ext.asyncio import create_async_engine, async_sessionmaker
from sqlalchemy.orm import declarative_base

from app.config import settings

# --------- tiny JSON logger ----------
def jlog(**k): print(json.dumps(k, ensure_ascii=False), flush=True)

# --------- DB setup ----------
Base = declarative_base()
_engine = create_async_engine(str(settings.DATABASE_URL), future=True, echo=False)
Session = async_sessionmaker(_engine, expire_on_commit=False)

# --- tables mapped to your schema ---
class TgLink(Base):
    __tablename__ = "tg_links"
    tg_user_id = Column(BigInteger, primary_key=True)
    user_id = Column(Integer, nullable=False)
    created_at = Column(DateTime(timezone=True))

class TaskMeta(Base):
    __tablename__ = "task_meta"
    notion_page_id = Column(String, primary_key=True)
    first_seen_at = Column(DateTime(timezone=True))
    last_seen_at = Column(DateTime(timezone=True))
    last_recommended_at = Column(DateTime(timezone=True))
    last_celebrated_at = Column(DateTime(timezone=True))
    snoozed_until = Column(DateTime(timezone=True))
    nags = Column(Integer, default=0)
    meta = Column(JSONB)

class Brief(Base):
    __tablename__ = "briefs"
    id = Column(Integer, primary_key=True, autoincrement=True)
    user_id = Column(Integer, nullable=False)
    kind = Column(String)  # e.g., 'daily'
    sent_at = Column(DateTime(timezone=True), server_default=func.now())
    summary = Column(Text)  # final brief markdown
    meta = Column(JSONB)  # extra stats

class BriefItem(Base):
    __tablename__ = "brief_items"
    id = Column(Integer, primary_key=True, autoincrement=True)
    brief_id = Column(Integer, ForeignKey("briefs.id", ondelete="CASCADE"), nullable=False)
    notion_page_id = Column(String, nullable=False)
    role = Column(String, nullable=False)  # 'due_today' | 'past_due' | 'completed' | 'idle_nudge'
    score = Column(Integer)
    due_at = Column(DateTime(timezone=True))
    snapshot = Column(JSONB)
    created_at = Column(DateTime(timezone=True), server_default=func.now())

# --------- helpers ----------
def _tz_now():
    # pytz is not in the requirements.txt shown above; if the import fails,
    # this silently falls back to UTC
    try:
        import pytz
        tz = pytz.timezone(getattr(settings, "TZ", "Asia/Bangkok"))
        return datetime.now(tz)
    except Exception:
        return datetime.now(timezone.utc)

def _ensure_notion() -> NotionClient:
    if not settings.NOTION_TOKEN or not settings.NOTION_TASKS_DB_ID:
        raise RuntimeError("NOTION_TOKEN / NOTION_TASKS_DB_ID not configured")
    return NotionClient(auth=settings.NOTION_TOKEN)

async def _resolve_user_id_from_chat(chat_id: int) -> int:
    async with Session() as s:
        row = (await s.execute(select(TgLink).where(TgLink.tg_user_id == chat_id))).scalars().first()
        return row.user_id if row else 0

async def _touch_seen(notion_page_id: str):
    now = datetime.now(timezone.utc)
    async with Session.begin() as s:
        row = (await s.execute(select(TaskMeta).where(TaskMeta.notion_page_id == notion_page_id))).scalars().first()
        if row:
            row.last_seen_at = now
            if not row.first_seen_at: row.first_seen_at = now
        else:
            s.add(TaskMeta(notion_page_id=notion_page_id, first_seen_at=now, last_seen_at=now, nags=0))

def _is_snoozed(meta: Optional[TaskMeta]) -> bool:
    return bool(meta and meta.snoozed_until and meta.snoozed_until > datetime.now(timezone.utc))

def _is_idle(last_edited_iso: Optional[str], cutoff_hours: int) -> bool:
    if not last_edited_iso:
        return True
    try:
        when = datetime.fromisoformat(last_edited_iso.replace("Z", "+00:00"))
    except Exception:
        return True
    return (datetime.now(timezone.utc) - when) > timedelta(hours=cutoff_hours)

async def snooze_task(notion_page_id: str, hours: int = 24):
    async with Session.begin() as s:
        row = (await s.execute(select(TaskMeta).where(TaskMeta.notion_page_id == notion_page_id))).scalars().first()
        until = datetime.now(timezone.utc) + timedelta(hours=hours)
        if row:
            row.snoozed_until = until
        else:
            s.add(TaskMeta(notion_page_id=notion_page_id, snoozed_until=until, nags=0))

# --------- Notion extraction ----------
def _extract_task(task: Dict) -> Dict:
    p = task.get("properties", {})
    title = ""
    if p.get("Task", {}).get("title"):
        title = p["Task"]["title"][0]["text"]["content"]
    # Notion returns null for an empty select/date, so guard with `or {}`
    area = (p.get("Area", {}).get("select") or {}).get("name") or "No Area"
    score = p.get("Competition Score", {}).get("number") or 0
    priority = (p.get("Priority", {}).get("select") or {}).get("name") or "🟢"
    status = (p.get("Status", {}).get("select") or {}).get("name") or "🎯 Not Started"
    due_start = (p.get("Due", {}).get("date") or {}).get("start")
    return {
        "id": task.get("id"),
        "name": title or "Untitled",
        "area": area,
        "score": score,
        "priority": priority,
        "status": status,
        "due_start": due_start,
        "last_edited_time": task.get("last_edited_time"),
        "raw": task,  # for snapshot save
    }

def _prioritize_work(tasks: List[Dict], work_first: bool) -> List[Dict]:
    if not work_first:
        return sorted(tasks, key=lambda x: x.get("score", 0), reverse=True)
    work = [t for t in tasks if (t.get("area") or "").lower() == "work"]
    other = [t for t in tasks if (t.get("area") or "").lower() != "work"]
    work.sort(key=lambda x: x.get("score", 0), reverse=True)
    other.sort(key=lambda x: x.get("score", 0), reverse=True)
    return work + other

#--- ADD: mark when we recommended/nudged a task in a brief
async def _mark_recommended(notion_page_id: str):
    now = datetime.now(timezone.utc)
    async with Session.begin() as s:
        row = (
            await s.execute(
                select(TaskMeta).where(TaskMeta.notion_page_id == notion_page_id)
            )
        ).scalars().first()
        if row:
            row.last_recommended_at = now
            row.nags = (row.nags or 0) + 1
        else:
            # create if not seen yet
            s.add(
                TaskMeta(
                    notion_page_id=notion_page_id,
                    first_seen_at=now,
                    last_seen_at=now,
                    last_recommended_at=now,
                    nags=1,
                )
            )

#--- ADD: mark when we celebrated a win
async def _mark_celebrated(notion_page_id: str):
    now = datetime.now(timezone.utc)
    async with Session.begin() as s:
        row = (
            await s.execute(
                select(TaskMeta).where(TaskMeta.notion_page_id == notion_page_id)
            )
        ).scalars().first()
        if row:
            row.last_celebrated_at = now
        else:
            s.add(
                TaskMeta(
                    notion_page_id=notion_page_id,
                    first_seen_at=now,
                    last_seen_at=now,
                    last_celebrated_at=now,
                    nags=0,
                )
            )

# --------- PUBLIC: build + persist brief ----------
async def get_todays_brief(user_id_from_chat: int) -> str:
    """
    - Fetch Notion tasks (due today or past due)
    - Apply snooze from task_meta; detect idle
    - Generate brief via Anthropic/OpenAI using your prompt
    - Persist to briefs + brief_items (with snapshots)
    """
    notion = _ensure_notion()
    now = _tz_now()
    today_s = now.date().strftime("%Y-%m-%d")
    is_working_day = now.weekday() < 5
    work_first = is_working_day and now.hour < 17

    # map TG chat_id -> internal user_id (if mapping exists)
    user_id = await _resolve_user_id_from_chat(user_id_from_chat)

    # fetch
    query = {
        "filter": {"or": [
            {"property": "Due", "date": {"equals": today_s}},
            {"property": "Due", "date": {"before": today_s}},
        ]},
        "sorts": [
            {"property": "Due", "direction": "ascending"},
            {"property": "Competition Score", "direction": "descending"},
        ],
        "page_size": 100,
    }
    try:
        res = notion.databases.query(database_id=settings.NOTION_TASKS_DB_ID, **query)
    except Exception as e:
        jlog(event="notion_error", error=str(e))
        return "I couldn’t fetch today’s brief. Check Notion token, DB ID, or property names."

    raw_tasks = res.get("results", [])
    tasks = [_extract_task(t) for t in raw_tasks]

    # load task_meta map
    async with Session() as s:
        metas = (await s.execute(select(TaskMeta).where(TaskMeta.notion_page_id.in_([t["id"] for t in tasks])))).scalars().all()
    meta_map = {m.notion_page_id: m for m in metas}

    today_d = now.date()
    past_due, due_today, completed = [], [], []
    idle_cutoff_h = getattr(settings, "pa_base_cooldown_hours", 36)
    idle_flags = []

    for t in tasks:
        await _touch_seen(t["id"])
        status = t.get("status", "")
        if any(k in status for k in ("Done", "Complete", "✅")):
            completed.append(t)
            continue
        # snooze filter
        if _is_snoozed(meta_map.get(t["id"])):
            continue
        # due bucket
        due_d = today_d
        if t["due_start"]:
            try:
                due_d = datetime.fromisoformat(t["due_start"].replace("Z", "+00:00")).date()
            except Exception:
                pass
        (past_due if due_d < today_d else due_today).append(t)
        # idle marker
        if _is_idle(t.get("last_edited_time"), idle_cutoff_h):
            idle_flags.append({"id": t["id"], "name": t["name"], "area": t["area"]})

    past_due = _prioritize_work(past_due, work_first)
    due_today = _prioritize_work(due_today, work_first)

    # simple counts log (helps when checking docker logs)
    jlog(
        event="brief_buckets",
        counts=dict(
            due_today=len(due_today),
            past_due=len(past_due),
            completed=len(completed),
            idle_nudges=len(idle_flags),
        ),
    )

    # --- WRITE MEMORY: mark recommended & celebrated ---
    # idle_flags repeats tasks already in the due buckets, so dedupe by id
    # to bump `nags` only once per task per brief
    for page_id in {t["id"] for t in (due_today + past_due + idle_flags)}:
        await _mark_recommended(page_id)
    for t in completed:
        await _mark_celebrated(t["id"])

    # build the LLM input ONCE
    task_data = {
        "current_date": now.strftime("%A, %B %d, %Y"),
        "current_time": now.strftime("%I:%M %p"),
        "is_working_day": is_working_day,
        "current_hour": now.hour,
        "past_due_tasks": past_due,
        "due_today_tasks": due_today,
        "completed_tasks": completed,
        "idle_nudges": idle_flags,
    }

    # generate text ONCE
    brief_md = await _generate_brief_with_prompt(task_data)

    # persist brief + items (with snapshots)
    await _persist_brief_and_items(
        user_id=user_id,
        kind="daily",
        summary=brief_md,
        buckets={
            "due_today": due_today,
            "past_due": past_due,
            "completed": completed,
            "idle_nudge": idle_flags,
        }
    )
    return brief_md

async def _persist_brief_and_items(
    user_id: int,
    kind: str,
    summary: str,
    buckets: Dict[str, List[Dict]]
):
    async with Session.begin() as s:
        b = Brief(user_id=user_id, kind=kind, summary=summary, meta={"counts": {k: len(v) for k, v in buckets.items()}})
        s.add(b)
        # we need the id: flush so the database assigns it inside this
        # transaction, instead of querying for the latest row afterwards
        # (which could pick up another brief written at the same time)
        await s.flush()
        b_id = b.id

    # insert items
    to_insert = []
    for role, items in buckets.items():
        for t in items:
            due_at = None
            if t.get("due_start"):
                try:
                    due_at = datetime.fromisoformat(t["due_start"].replace("Z", "+00:00"))
                except Exception:
                    pass
            snap = t.get("raw") if role != "idle_nudge" else t  # idle_nudge is already a small dict
            to_insert.append(BriefItem(
                brief_id=b_id,
                notion_page_id=t["id"],
                role=role,
                score=int(t.get("score", 0)),
                due_at=due_at,
                snapshot=snap,
            ))
    if to_insert:
        async with Session.begin() as s:
            s.add_all(to_insert)

# --------- Prompt + LLM calls (your old style, adapted) ----------
async def _generate_brief_with_prompt(task_data: Dict) -> str:
    now = _tz_now()
    is_working_day = now.weekday() < 5
    hour = now.hour
    if is_working_day:
        sched = "**WORK HOURS:** Prioritize 'Work' area tasks. Personal tasks after 5PM." if hour < 17 else "**AFTER WORK:** Personal tasks OK."
    else:
        sched = "**WEEKEND:** Full flexibility on all task areas."

    idle_hint = ""
    if task_data.get("idle_nudges"):
        names = ", ".join(t["name"] for t in task_data["idle_nudges"][:5])
        idle_hint = f"\n**IDLE / STALE:** Consider nudging or snoozing: {names}"

    prompt = f"""
You are a senior productivity strategist analyzing daily tasks for a multi-faceted professional. Provide a concise but insightful morning briefing.

**CONTEXT:**
- IT and Data Lead at major bank (strategic, analytical work)
- abc.com entrepreneur (creative, business development)
- AI automation builder (technical, systematic thinking)
- Cat parent to rescued kittens (caring, responsible)
- Uses competition scoring (1-10) and Eisenhower Matrix prioritization
- Strengths: Ideation, Competition, Achiever, Arranger

**SCHEDULE AWARENESS:**
{sched}{idle_hint}

**TODAY'S DATA (JSON):**
{json.dumps(task_data, indent=2)}

**BRIEFING REQUEST:**
Create a strategic morning briefing with:
1. **🎯 Daily Focus** (1-2 sentences)
2. **✅ Completed Tasks** (celebrate wins)
3. **⚡ Execution Plan** (ordered steps; respect schedule constraints)
4. **🧠 Strategic Insights** (energy/workflow tactics; reference competition scores)
5. **🏆 Achievement Momentum** (compound recent wins)
6. **💡 Tactical Tip** (one specific actionable insight)
7. **🔔 Suggested Nudges** (use `idle_nudges` to suggest pings; suggest snoozes if not today)

**RULES:**
- Work days before 5PM: Work-first; after 5PM: personal OK; weekends: flexible.
- Keep under 350 words, markdown for Telegram, bullet-friendly.

**OUTPUT FORMAT:** Pure markdown text, no extra preamble.
"""

    # Prefer Anthropic; fallback OpenAI
    if getattr(settings, "anthropic_api_key", None):
        try:
            return await _claude_call(prompt)
        except Exception as e:
            jlog(event="anthropic_error", error=str(e))
    return await _openai_call(prompt)

async def _claude_call(prompt: str) -> str:
    headers = {"Content-Type": "application/json",
               "X-API-Key": settings.anthropic_api_key,
               "anthropic-version": "2023-06-01"}
    payload = {"model": getattr(settings, "anthropic_model", "claude-3-5-sonnet-20241022"),
               "max_tokens": 1200,
               "messages": [{"role": "user", "content": prompt}]}
    async with httpx.AsyncClient(timeout=60) as c:
        r = await c.post("https://api.anthropic.com/v1/messages", headers=headers, json=payload)
        r.raise_for_status()
        data = r.json()
        return data["content"][0]["text"]

async def _openai_call(prompt: str) -> str:
    api_key = getattr(settings, "OPENAI_API_KEY", None)
    model = getattr(settings, "llm_model", "gpt-4o-mini")
    if not api_key:
        return "LLM is not configured; please set OPENAI_API_KEY or ANTHROPIC_API_KEY."
    async with httpx.AsyncClient(timeout=60) as c:
        r = await c.post("https://api.openai.com/v1/chat/completions",
                         headers={"Authorization": f"Bearer {api_key}"},
                         json={"model": model, "temperature": 0.4,
                               "messages": [{"role": "user", "content": prompt}]})
        r.raise_for_status()
        data = r.json()
        return data["choices"][0]["message"]["content"]

That’s quite long, but here is a very high-level summary:

- A helper module that glues Telegram → Notion → LLM → Telegram.

- It knows your DB tables, talks to Notion, and asks an LLM to write a short brief.

The main thing happening here is get_todays_brief(chat_id):

- Figure out who’s asking (TG chat → user id).

- Fetch tasks from Notion that are due today or earlier, sorted by Due date then Score.

- Parse them into simple dicts (name, area, score, status, due, last_edited).

- Bucket them:

  - skip snoozed

  - split into past_due, due_today, completed

  - mark idle if untouched ~36h

  - (workday before 5pm? put Work first)

- Update memory:

  - recommended items → last_recommended_at, nags += 1

  - completed → last_celebrated_at

  - everything seen → last_seen_at (and first_seen_at if new)

- Ask an LLM (Claude first, OpenAI fallback) to write a short Markdown morning brief using your schedule rules and those buckets.

- Save an audit trail:

  - one row in briefs (the brief text + counts)

  - one row per task in brief_items (snapshot for later review)

- Return the Markdown (so your Telegram handler can send it back).
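The bucketing step is the heart of the function, so here is a stripped-down sketch of it with no Notion or database involved. It simplifies the real code on purpose: snoozing is left out, "today" is taken from a UTC `now`, and the task dict keys (`due`, `last_edited`) and sample tasks are assumptions for the example.

```python
from datetime import date, datetime, timedelta, timezone


def is_idle(last_edited_iso, now, cutoff_hours=36):
    """True when the task has not been edited within the cutoff window."""
    if not last_edited_iso:
        return True
    try:
        when = datetime.fromisoformat(last_edited_iso.replace("Z", "+00:00"))
    except ValueError:
        return True
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)  # assume UTC if no offset given
    return (now - when) > timedelta(hours=cutoff_hours)


def bucket_tasks(tasks, now, work_first):
    """Split tasks into past_due / due_today / completed / idle (names only)."""
    today = now.date()
    buckets = {"past_due": [], "due_today": [], "completed": [], "idle": []}
    for t in tasks:
        if "Done" in t["status"]:
            buckets["completed"].append(t["name"])
            continue
        due = date.fromisoformat(t["due"]) if t.get("due") else today
        buckets["past_due" if due < today else "due_today"].append(t)
        if is_idle(t.get("last_edited"), now):
            buckets["idle"].append(t["name"])
    for key in ("past_due", "due_today"):
        # Work area first (only when work_first), then highest score first.
        ordered = sorted(
            buckets[key],
            key=lambda t: (work_first and t["area"].lower() != "work", -t["score"]),
        )
        buckets[key] = [t["name"] for t in ordered]
    return buckets


if __name__ == "__main__":
    now = datetime(2025, 1, 6, 3, 0, tzinfo=timezone.utc)
    tasks = [
        {"name": "Report", "area": "Work", "score": 5, "status": "In Progress",
         "due": "2025-01-06", "last_edited": "2025-01-06T01:00:00.000Z"},
        {"name": "Blog", "area": "Hobby", "score": 9, "status": "Not Started",
         "due": "2025-01-06", "last_edited": "2025-01-01T01:00:00.000Z"},
    ]
    print(bucket_tasks(tasks, now, work_first=True))
```

With `work_first=True` the lower-scored Work task still comes before the hobby task, which mirrors the "workday before 5pm" rule.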

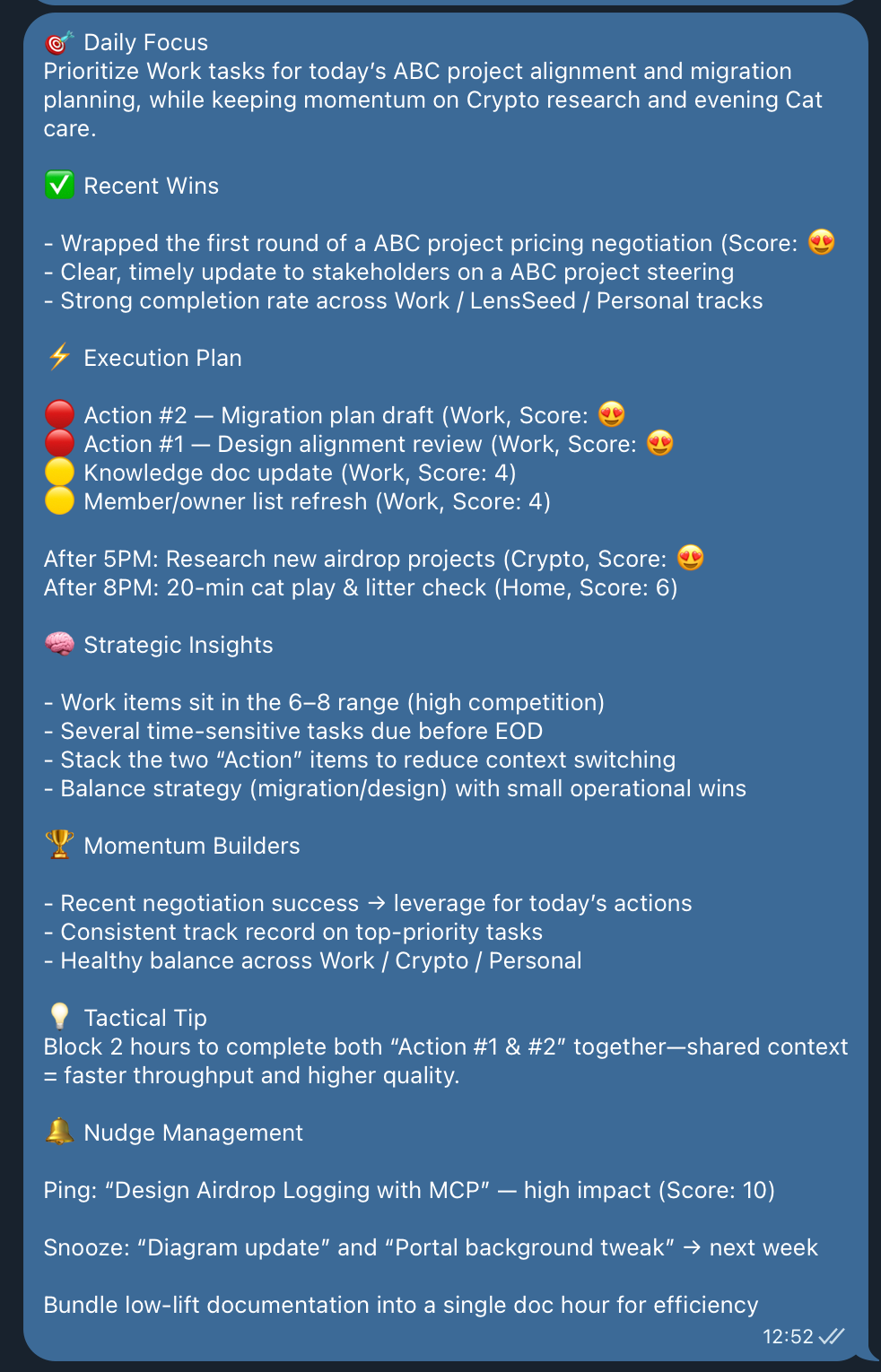

Here is the output (redacted) for my morning brief:

That’s it for Episode 1. I suggest you follow these steps side by side with ChatGPT. If you had the patience to read this far, I strongly believe you can do it.

My trick: understand the structure and flow; you don’t need to know every line of code. You set the architecture and logic, and let AI do the heavy coding. Keep a simple doc, in plain words or as a logic diagram, as your north star.

One caveat: AI memory is still limited. It’s much better than two years ago, but it won’t reliably remind you what to continue after sleep or a long break. Without your north star, you’ll lose direction easily.

Thank you for reading.